
For We Are Many

This is part 2 of a 3-part series in the Bobiverse. See my short analysis of part 1 here. I think I’ll skip a separate analysis of this one and do a final analysis when part 3 is out (because, again, many storylines are left wide open).

The Blank Slate

The Blank Slate by Steven Pinker takes a critical look at the human brain and argues that it is NOT a blank slate. Pinker (also known for The Better Angels of Our Nature and Enlightenment Now) combines his storytelling skills with his deep (and wide) knowledge to make a convincing argument for how the brain (or mind) interacts with our environment.

Here is a short summary from the book:

One of the world’s leading experts on language and the mind explores the idea of human nature and its moral, emotional, and political colourings. With characteristic wit, lucidity, and insight, Pinker argues that the dogma that the mind has no innate traits, a doctrine held by many intellectuals during the past century, denies our common humanity and our individual preferences, replaces objective analyses of social problems with feel-good slogans, and distorts our understanding of politics, violence, parenting, and the arts. Injecting calm and rationality into debates that are notorious for axe-grinding and mud-slinging, Pinker shows the importance of an honest acknowledgement of human nature based on science and common sense.

Here is the table of contents:

- pt. 1. The blank slate, the noble savage, and the ghost in the machine

  - The official theory

  - Silly putty

  - The last wall to fall

  - Culture vultures

  - The slate’s last stand

- pt. 2. Fear and loathing

  - Political scientists

  - The holy trinity

- pt. 3. Human nature with a human face

  - The fear of inequality

  - The fear of imperfectibility

  - The fear of determinism

  - The fear of nihilism

- pt. 4. Know thyself

  - In touch with reality

  - Out of our depths

  - The many roots of our suffering

  - The sanctimonious animal

- pt. 5. Hot buttons

  - Politics

  - Violence

  - Gender

  - Children

  - The arts

- pt. 6. The voice of the species

The chapter I want to highlight (and make some observations about) is the one on children. This is where the evidence surprised me most.

In psychology (what I studied) you learn about the 50/50ish division between genes and environment (nature vs nurture) and of course that there are interactions between both.

An example would be your height. Your genetic make-up largely determines how tall you will become. But if you’re malnourished whilst growing up, you will come up a few centimetres short in the end.

Or think of your temperament. You may be a stoic, or a hothead. Over the years you learn to deal with how you’re wired, and some do better than others: they learn better techniques, while others are unlucky in their childhood.

And this is where Pinker, armed with data, made me think about things a bit differently than before. He argues that your family and all the experiences shaped by your parents (the ‘shared’ experiences you would have in common with your siblings) don’t matter at all, at least not for explaining the variance in outcomes between people.

That is, if we’re looking at how you express your temperament, or the chance that you will end up in jail, then about 50% is explained by your genes and about 50% by your unique experiences.

Unique experiences? The friends you had and smoked weed with when you were 15. The TV shows you watched in your bedroom. The teacher who took you under his wing. All things that are (almost) completely outside your parents’ control.

But, but… parents should have an influence, right? I also can’t shake the feeling that what a parent does should have an influence. But when looking at (identical) twins who grew up in different families, or looking at different families in similar circumstances, and many other configurations, Pinker concludes that the shared experiences really count for nothing.

Looking at it another way, there may simply be many more (influential) unique experiences. Take your experience of sex(uality), which is formed by many different factors. There are the genes, of course. But what would have more influence: your parents telling you about the birds and the bees, or your first good/bad/average intimate evening? Or the stories your peer group tells you, and the expectations that differ from class to class, from peer group to peer group?

What if you can still shape this environment? As a parent, can’t you choose where your kid grows up and influence them that way? I guess that may still be true. Still, you’re only marginally improving the environment (which accounts for about 50%), and there is still so much variation in unique experiences.

Say you choose the best school, but because your kid is now surrounded by smarter kids, he becomes very insecure and gets bullied. Or you move to the countryside because you believe it’s safer, and he gets hit by a car in the middle of nowhere.

OK, enough rambling. Pinker does end the chapter well. Don’t see your kids as a blank slate you need to shape and fill. No, see them as your friends, as (little) people you want to hang out with and enjoy your time with (they have half your genes, so you might get along great). And yes, don’t be a bad parent; why would you even consider that? Be a good parent simply because it’s the moral thing to do (and not for their outcomes).

God Is Not Great

God Is Not Great by Christopher Hitchens takes reason to religion. It’s a deep dive into a terribly important topic, not least because religion has shaped (and for the foreseeable future will shape) our lives. Whilst some argue that we’re already living in the next Enlightenment (or hope so, like Steven Pinker), Hitchens is more militant and political: if we need a new Enlightenment, he will be one of its horsemen.

Here are some observations I had on the chapters:

- Putting it Mildly

  - “There still remains four irreducible objections to religious faith: that it wholly misrepresents the origins of man and the cosmos, that because of this original error it manages to combine the maximum of servility with the maximum of solipsism, that it is both the result and the cause of dangerous sexual repression, and that it is ultimately grounded on wish-thinking.”

  - Religion has been seen as attractive because we’re only partly rational

  - “… those who offer false consolation are false friends”

  - “Religion is man-made” (emphasis is on the man part)

  - “Religion poisons everything”

- Religion Kills

  - “It must seek to interfere with the lives of nonbelievers, or heretics, or adherents of other faiths. It may speak about the bliss of the next world, but it wants power in this one”

  - Since 2001, Hitchens sees religion (again) taking a larger role in public life (crazy Americans)

  - Religious wars/attacks between the West and the Middle East are two sides of the same coin (and feed/strengthen each other)

  - “In this respect, religion is not unlike racism. One version of it inspires and provokes the other”

- Why Heaven Hates Ham

  - Judaism and Islam both hate pigs, but why? (Some have even banned Animal Farm by George Orwell)

  - Pigs are intelligent, share lots of our DNA, and you can use almost every part

  - The ban is not rational (not even way back when): archaeology shows that other peoples ate pork without problems (such as trichinosis)

  - It’s just superstition that should have ended as soon as it started

- A Note on Health

  - Polio eradication was harmed by false rumours spread by Muslim religious leaders (see the same happening now in New York with measles, again wtf)

  - The damage from this is incalculable

  - Religions believe that the holy text should be enough to keep people from doing bad things (and perhaps protect them in some way?), but of course that is not how life works, and in many cases the victims didn’t even do anything morally wrong

  - “The attitude of religion to medicine, like the attitude of religion to science, is always necessarily problematic and very often necessarily hostile”

  - Two major religions in Africa “believe that the cure is much worse than the disease”

  - Child rape and torture (in religious schools) are suspected to have been so widespread that perhaps more kids were molested than not

  - Three conclusions up to this point:

    - Religion and the churches are manufactured

    - Ethics and morality are quite independent of faith, and cannot be derived from it

    - Religion is, because it claims a special divine exemption for its practices and beliefs, not just amoral but immoral

  - Religion actually looks forward to the day that it all ends. This is captured in a guilty joy (schadenfreude). Believers hope, of course, that they personally will be spared

- The Metaphysical Claims of Religion Are False

  - “Religion comes from the period of human prehistory where nobody had the smallest idea what was going on”

  - Ockham’s razor is one of the thinking tools that show religion/God is not a good explanation of the world, and not one science needs

- Arguments from Design

  - The paradox at the centre of the large religions is that believers are asked to be both submissive and rigorous/superior to others

  - The arguments from design concern both the small and the large, and they centre not on inquiry but on faith alone (e.g. dinosaur bones are there to test your faith)

  - The often-used example of the eye is badly mistaken. Eyes have evolved independently over 40 times, and the human eye is quite inefficient (backwards and upside down)

  - Our DNA has lots of ‘junk’ left behind from everything between us and the bacteria we came from

  - A theory (also an often misunderstood word) becomes accepted when it can make accurate predictions about things or events that have not yet been discovered or have not yet occurred

  - “…98 per cent of all the species that have ever appeared on earth have lapsed into extinction”

  - Evolution is not guided; that we’re here at all is the result of a lot of luck (and surviving bottlenecks)

  - Fun fact: “… a cow is closer in family to a whale than to a horse”

  - Hitchens mentions The End of Faith by Sam Harris

- Revelation (Old Testament)

  - Again, the focus is on man

  - The Ten Commandments say nothing about the protection of children, slavery, rape, or genocide

  - Shortly after that, God instructs Moses on when to buy and sell slaves (so, yeah), and on the rules for selling daughters

  - “… none of the gruesome, disordered events described in Exodus ever took place”

  - “Freud made the obvious point that religion suffered from one incurable deficiency: it was too clearly derived from our own desire to escape from or survive death”

  - The context of the Bible is local! (no mention of the Incas or other peoples)

- The New Testament

  - The four gospels describe the events very differently and disagree on mythical elements (including the crucifixion and resurrection of Jesus)

  - “the word translated as ‘virgin’, namely almah means only ‘a young woman’”

  - Jesus had four brothers and some sisters (Matthew 13:55-57)

  - (quoting C.S. Lewis, a “most popular Christian apologist”, speaking about Jesus): “Now, unless the speaker is God, this is really so preposterous as to be comic.”

  - “The case for the biblical consistency or authenticity or ‘inspiration’ has been in tatters for some time, and the rents and tears only become more obvious with better research, and thus no ‘revelation’ can be derived from that quarter. So, then, let the advocates and partisans of religion rely on faith alone, and let them be brave enough to admit that this is what they are doing.”

- The Koran

  - “… relates facts about extremely tedious local quarrels.”

  - The Koran can only truly be read in the original Arabic; translations are not considered as true as the original revealed text

  - “There has never been an attempt in any age to challenge or even investigate the claims of Islam that has not been met with extremely harsh and swift repression.”

  - Almost as soon as Islam was established, quarrels over leadership erupted after Muhammad died, starting the Sunni and Shia divide.

  - Two quotes (as a rebuttal to claims of Islam’s benign tolerance): “Nobody who dies and finds good from Allah (in the hereafter) would wish to come back to this world even if he were given the whole world and whatever is in it, except the martyr who, on seeing the superiority of martyrdom, would like to come back to the world and be killed again.” and “God will not forgive those who serve other gods beside Him; but he will forgive whom He will for other sins. He that serves other gods besides God is guilty of a heinous sin.”

- The Tawdriness of the Miraculous

  - “… the age of miracles seems to lie somewhere in our past.”

  - This could be welcomed, but faith is apparently not enough on its own, so we get some very strange attempts at claiming miracles (which are then, of course, discredited).

  - Houdini and others have shown us in many ways that ‘miracles’ are clever tricks that comply with the laws of nature.

  - “Miracles, in any case, do not vindicate the truth of the religion that practices them.”

  - Many people believe in miracle cures and therefore forgo normal treatment.

  - “… many people will die needlessly as a result of this phoney and contemptible ‘miracle’.”

  - “The evidence (miracles) for faith, then, seems to leave faith looking even weaker than it would if it stood, alone and unsupported, all by itself.”

  - We have many other things to wonder about: the atom, quarks, black holes, the morning sun in spring, etc.

- Religion’s Corrupt Beginnings

  - Here Hitchens takes a closer look at the origin of religion, using cargo cults and the Mormons as relatively recent examples.

  - “Is it not true that all religions down the ages have shown a keen interest in the amassment of material goods in the real world?”

  - American evangelism is a heartless con run by no-good men (also see Derren Brown – Miracles for Sale)

  - “… twenty-five thousand words of the Book of Mormon are taken directly from the Old Testament.” (and 2,000 from the New Testament)

  - What if religion is the outcome of believing in belief, of having faith, which in ancient times meant higher morale and thus a higher chance of survival?

- How Religion Ends

  - A short chapter on religions that no longer exist and how they stopped (no clear/actionable reason, except losing their following or a predicted end-of-the-world event not happening).

- Does Religion Make People Behave Better?

  - Religion is often equated with morality, with learning how to live a good life.

  - But being moral and being religious don’t go hand in hand: in many cases, the very ‘holy’ people are the ones who abuse kids. And there have been great atheists (even before our mainstream religions existed) who did great moral things.

  - The slave trade was blessed by the church (and Islam), “the most devout Christians made the most savage slaveholders.”

  - “No supernatural force was required to make the case against racism.”

  - “… there is no country in the world today where slavery is still practised where the justification of it is not derived from the Koran.”

  - In India’s emancipation, too, religion held things back instead of helping, and yes, that refers to the backward beliefs of Gandhi

  - “When priests go bad, they go very bad indeed, and commit crimes that would make the average sinner pale. One might prefer to attribute this to sexual repression than to the actual doctrines preached, but then one of the actual doctrines preached is sexual repression…”

  - The church has also been bad at saying sorry for the sins of the past and slow to denounce people who do bad things in the name of faith.

- There Is No “Eastern” Solution

  - Even in the East you are not safe. Gurus go after the possessions of famous/rich/average people, engage in orgies, and strive for power, just like everywhere else.

  - Even Hindus and Buddhists have a history of murder and violence.

  - The best example is the (Japanese) Buddhists who took part in the rape of China in honour of Japan.

- Religion as an Original Sin

  - “There are indeed several ways in which religion is not just amoral, but positively immoral.”

  - “These include: presenting a false picture of the world to the innocent and the credulous, the doctrine of blood sacrifice, atonement, eternal reward and/or punishment, the imposition of impossible tasks and rules”

  - “The essential principle of totalitarianism is to make laws that are impossible to obey” (in regard to being held responsible for sins from before your time)

  - “… a spiritual police state.”

- Is Religion Child Abuse?

  - “… religion has always hoped to practice upon the unformed and undefended minds of the young…”

  - “… to keep the ignorant in a state of permanent fear.”

  - “The only proposition that is completely useless, either morally or practically, is the wild statement that sperms and eggs are all potential lives which must not be prevented from fusing and that, when united however briefly, have souls and must be protected by law.”

  - “Mother Teresa denounced contraception as the moral equivalent of abortion…”

  - “… it is hard to imagine anything more grotesque than the mutilation of infant genitalia [both sexes, multiple religions]. Nor is it easy to imagine anything more incompatible with the argument from design.”

  - “… we are talking about the systematic rape and torture of children, positively aided and abetted by a hierarchy which knowingly moved the grossest offenders to parishes where they would be safer.”

- An Objection Anticipated

  - (short summary of points made before) “… usefulness of religion is in the past, and that its foundational books are transparent fables, and that it is man-made imposition, and that it has been an enemy of science and inquiry, and that it has subsisted largely on lies and fears, and been the accomplice of ignorance and guilt as well as of slavery, genocide, racism, and tyranny…”

  - Religion was bad, but weren’t there also terrible atheists like Hitler and Stalin? Alas, religion went to bed with them and was quite the supporter.

  - “The very first diplomatic accord undertaken by Hitler’s government… a treaty with the Vatican.”

  - Communism was not there to fight religion; it was there to replace it.

- A Finer Tradition

  - Unbelief has been around as long as belief (see the trial of Socrates)

  - “Human decency is not derived from religion. It precedes it.”

  - Imagine a world where religion hadn’t taken hold so early on, or where it had died out somewhere along the way: what a world we would live in!

- In Conclusion (The Need for a New Enlightenment)

  - “… it is better and healthier for the mind to “choose” the path of scepticism and inquiry in any case…”

  - “… we are in need of a renewed Enlightenment, which will base itself on the proposition that the proper study of mankind is man and woman.”

I found this quite the enlightening read. Although I don’t think religion and the discussion around it will occupy much of my mind-space, it is a good introduction to the sins of religion and has given me a better understanding of the space.

How to Get People to Do Stuff

How to Get People to Do Stuff by Susan Weinschenk can be seen as a guide to actionable psychology/behavioural economics. The book is full of tips and tricks on how to get people to take action. It’s quite high-level but does reference good sources (e.g. research by Daniel Kahneman). Here are the sections:

- The Need to Belong

- Habits

- The Power of Stories

- Carrots and Sticks

- Instincts

- The Desire for Mastery

- Tricks of the Mind

I skimmed through quite a few parts (but did look at the strategies) and now have 9 action items for Queal.

Public Commitment 2019 – Update 1

This year my theme is Connection. And for this, I’ve started laying the groundwork. Just like in the second half of 2018, I’ve been keeping up my updates on the Timeline.

One big thing I’ve done is follow a course on ‘The Molecular Mechanisms of Ageing’. The course was quite detailed and I’ve learned more of the fundamentals of ageing. I hope to use this knowledge further in

I’ve also followed a JavaScript course from Codecademy. The course was quite enlightening; now I should (and will) start using it (and future courses on PHP and other tools) to upgrade my website, workflows (e.g.

Another action item was to start applying more ‘book’ knowledge in real life. At Queal, we’ve been implementing two books in our routines: one about building a brand story (Building a StoryBrand), and the other about usability testing (Rocket Surgery Made Easy).

Here is my analysis of the goals and various updates:

Goal 1: Make this website a true personal knowledge hub

I’ve added almost all of my back-catalogue from before moving the website over. I’ve also done the same from another wiki-like system I used. Of course, I haven’t yet written all the blogs/reviews I wanted to write.

The goal stays the same and in the coming quarter, I hope to improve search and continue to add new things to the Timeline, reviews, etc. Maybe I will write a long essay, but no guarantees.

Goal 2: Eat good meals that support my well-being 90% of the time

Yes and no. Most meals I eat at home I’ve made myself, and I’ve put some effort into them. I do see that I eat quite a lot, including quite a lot of yoghurt with toppings at work.

I will keep being conscious of this and with Lotte moving in, I think my food will be very good. I might even bring some more to the office (and have some variety next to 2-3 meals of Queal per day).

Goal 3: Keep on improving my house

Yes. I’ve done quite a lot since last writing here. The bathroom is finished (for which I only did the door and painting). I take a bath about once a week and of course it’s very convenient to have a toilet upstairs.

Next to that, Lotte is moving in soon (a few days after writing this draft, on the day of publishing), so we’ve been making the house even cooler in the meantime.

The plants have multiplied, we’ve painted some walls, there is a new couch, new bookshelves/library, etc. I like how everything looks and Lotte will take some time to sort out her things.

One other addition is ‘Anne’, the robot vacuum (from iRobot), and he is working quite well. Today I put away all the cables he kept getting tangled in, so he should be good to go.

Goal 4: Achieve my fitness goals

The last few weeks I’ve been following my own new plan and that is going very well. I don’t know yet what I can do best in terms of weight-loss versus muscle-gain, but everything looks good for now.

I’m still learning the Snatch and improving my Clean & Jerk. I haven’t measured my max yet (I will in about 7 weeks), and who knows, I might be a bit closer to 90 kg.

Goal 5: Write Spero

No. It is on my daily checklist, though. Who knows if I will be able to fit it into my routine in the coming months.

Alright, that’s it for now. I don’t have any new goals at the moment; let’s just be happy with the ones I have, as there is enough to be done already.

We Are Legion

“Bob Johansson has just sold his software company and is looking forward to a life of leisure. There are places to go, books to read, and movies to watch. So it’s a little unfair when he gets himself killed crossing the street.

Bob wakes up a century later to find that corpsicles have been declared to be without rights, and he is now the property of the state. He has been uploaded into computer hardware and is slated to be the controlling AI in an interstellar probe looking for habitable planets. The stakes are high: no less than the first claim to entire worlds. If he declines the honor, he’ll be switched off, and they’ll try again with someone else. If he accepts, he becomes a prime target. There are at least three other countries trying to get their own probes launched first, and they play dirty.

The safest place for Bob is in space, heading away from Earth at top speed. Or so he thinks. Because the universe is full of nasties, and trespassers make them mad – very mad.”

This was a fun story and makes me wonder about the second and third book in the ‘

Hmm, I do realise that there is a short story loop at the beginning:

You: Bob, sold his company, etc

Need: to live, and well, you’ve been hit by a bus

Go: you are an AI and you need to figure out how this works

Search: learn to work with the tools you have. Learn more about the world

Find (with the help of a guide): learns how to protect himself, use his new abilities

Take: has to leave the world, and leave all his connections to the world behind

Return: finds his humanity again in VR etc (and returns to Earth to save it later)

Change: he is the new Bob (and Bill, Homer, etc)

You: Bob, AI, cruising through space

Need: to survive the other AIs humans made (but a lot of new goals and subplots are introduced later; there I think the story might be weaker, but also interesting, hmm)

Go: on the way to new resources in other solar systems

Search: energy, place to be safe, make copies, learn skills

Find (with the help of a guide): arrives in other solar system(s), gains new skills, improves, finds a way to save humanity (after a while at least) (hmm, not really a guide here, except for some old knowledge of TV series etc., and some quotes from The Art of War)

Take: not all Bobs survive, humanity? (but not really, because you don’t really care about that too much) (I guess there could/should have been more sacrifice?)

Return: populates the universe, goes back to Earth

Change: he is the new Bob (and Bill, Homer, etc)

I guess another problem I had with the story structure was the lack of closed loops. The threat of the Brazilian probe is still there, there is another type of intelligent civilisation out there (that took the metal out of one system and left some bots there), there are the humanoids on another planet (and the gorillas etc. they have to survive), etc.

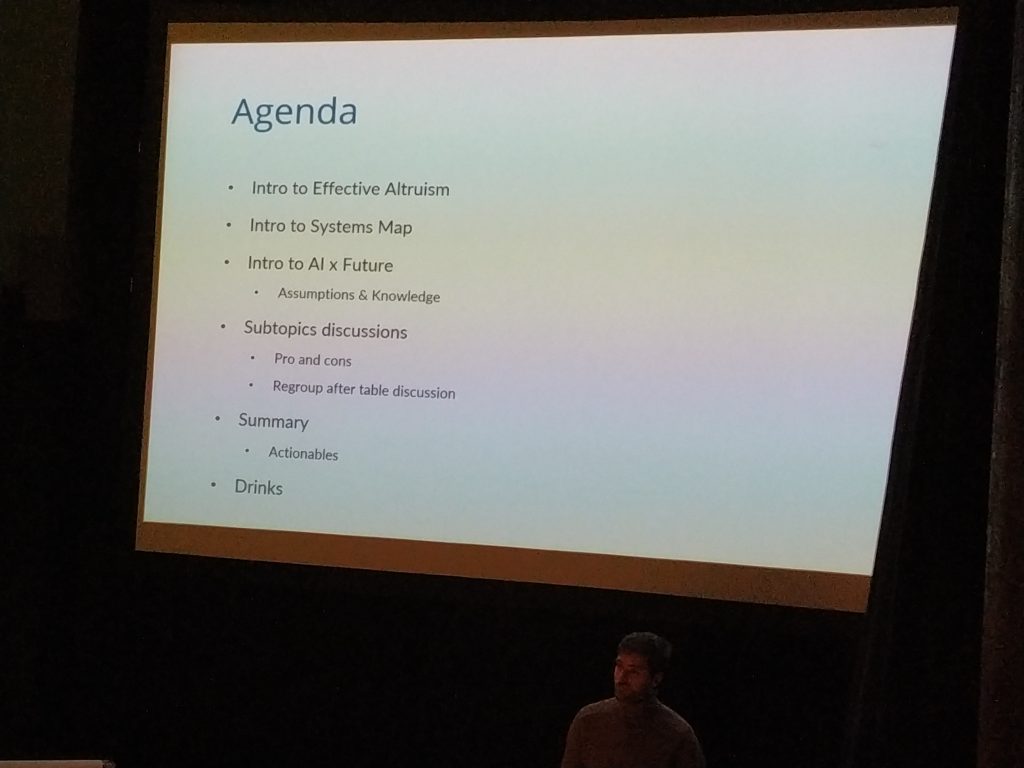

AI x Future

On Wednesday 13th of March 2019, the EA Rotterdam group held its seventh reading & discussion group. These sessions are a deeper dive into some of the EA topics.

The topic for this event was AI x Future: Prosperity or destruction?

During the evening we discussed how artificial intelligence (AI) could lead to a wide range of possible futures.

Although we instructed each group to argue only one side, all 3 groups of course also considered the opposite point of view. Here are the questions, presentations, and my personal summary of the night.

We (the organisers of EA Rotterdam) thank Alex from V2_ (our venue for the night) for hosting us, and Jan (also from V2_) for being our AI expert for the evening.

If you want to visit an EA Rotterdam event, visit our Meetup page.

Questions

These were our starting questions:

Bright Lights

- What could AI do for you personally (if AI did/solved X, I could now Y)?

- What could be the effect of AI on energy production? Could AI help prevent/solve/reverse climate change?

- What effect could AI have if fully implemented in health research (protein folding, cancer research, Alzheimer’s)?

- Could AI help us produce the food we need with fewer resources (new crops, cultured meat, fewer pesticides, etc)?

- How would mobility change with self-driving cars, trucks, buses, planes?

- Could we prevent crime from happening (e.g. intelligent cameras, prediction algorithms)?

- Can AI actually improve our privacy?

- Can we work with AI to create more together (e.g. human + AI chess teams a few years back, a doctor working with an AI imaging system)?

- Will AI make wars obsolete? Or when they happen, more humane?

- Could AI fight loneliness (e.g. robots in nursing homes)?

- Could AI help us learn and remember better (e.g. a ‘smarter’ Duolingo)?

- Will AI be conscious? If yes, could it ‘experience’ unlimited amounts of happiness?

Dark Despair

- Will there be any jobs for us to do in the (near/long-term) future? What can’t AI do?

- Who will enjoy the economic benefits from AI (Google/Facebook shareholders)? Will life become even more unequal than ever before in history?

- Could someone hack the autonomous cars of the future?

- Will we live in a totalitarian (China/Minority Report) state enabled by AI?

- Does AI mean the end of privacy (everything tracked and analysed)?

- Will AI enable more gruesome warfare (more weapons, no-one at the button)?

- Will we lose contact with each other / lose our humanity (e.g. robots in nursing homes, chatbots (in Japan))?

- What if the goals of the AI don’t align with our (humanity’s) goals? Can we still turn it off? How could we even align these goals when philosophy hasn’t really figured this one out yet!?

- Will AI be conscious? If no, will there still be humans to ‘experience’ the future?

Bonus:

- What are some concrete (future) examples of AI destroying the world?

- Can you think of some counter-arguments for the points you expect the other group to raise?

- Write down the date your group thinks AGI will happen? (don’t show the other team)

AI x Future

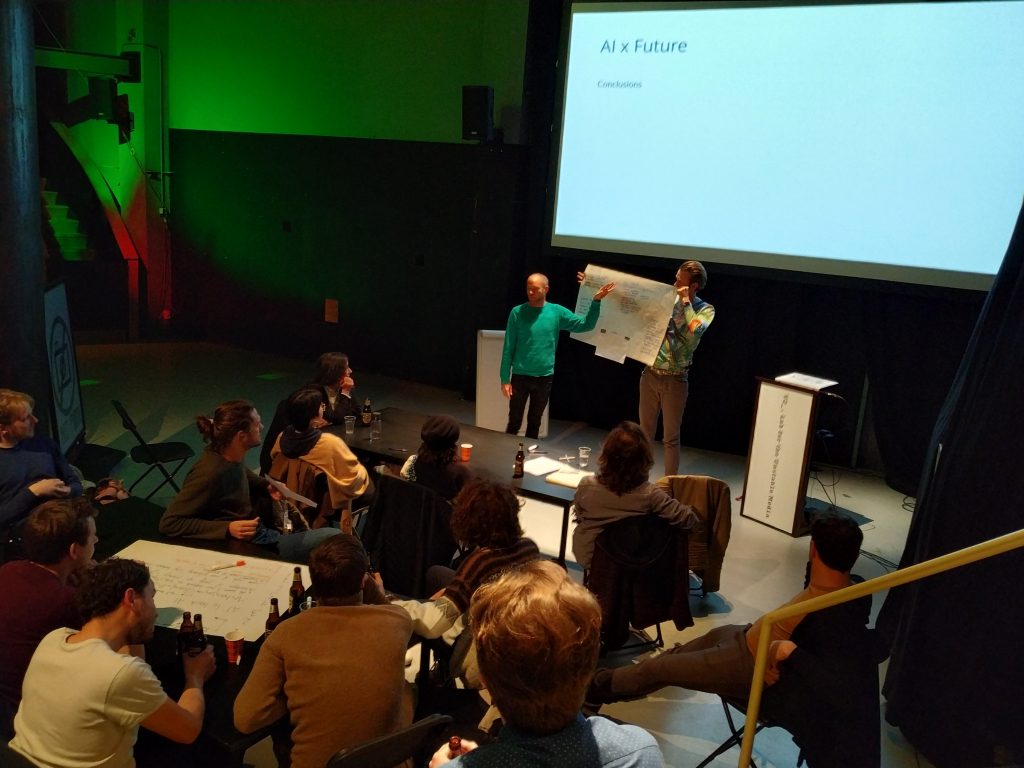

We started the evening with a presentation by Christiaan and Floris (me). In it, we explained both Effective Altruism (EA) and how (through this framework) we look at AI.

After that, we split into three groups (two arguing Dark Despair, one arguing Bright Lights), and each group made a mindmap/overview of the questions above (download them). This is a summary of both sides:

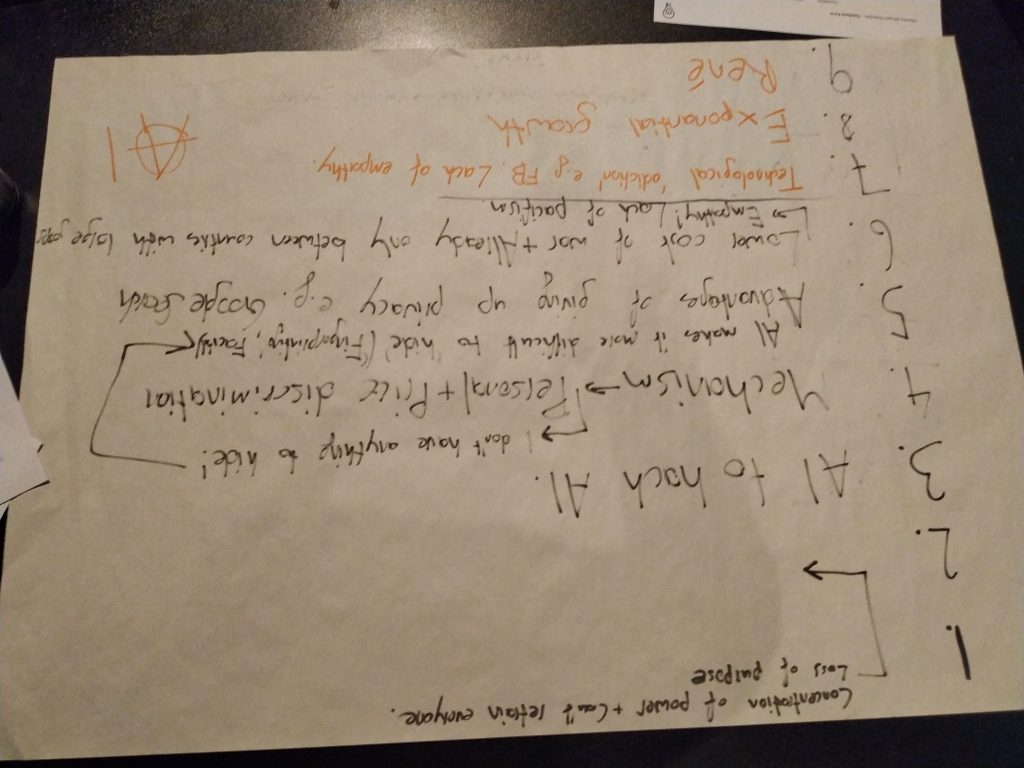

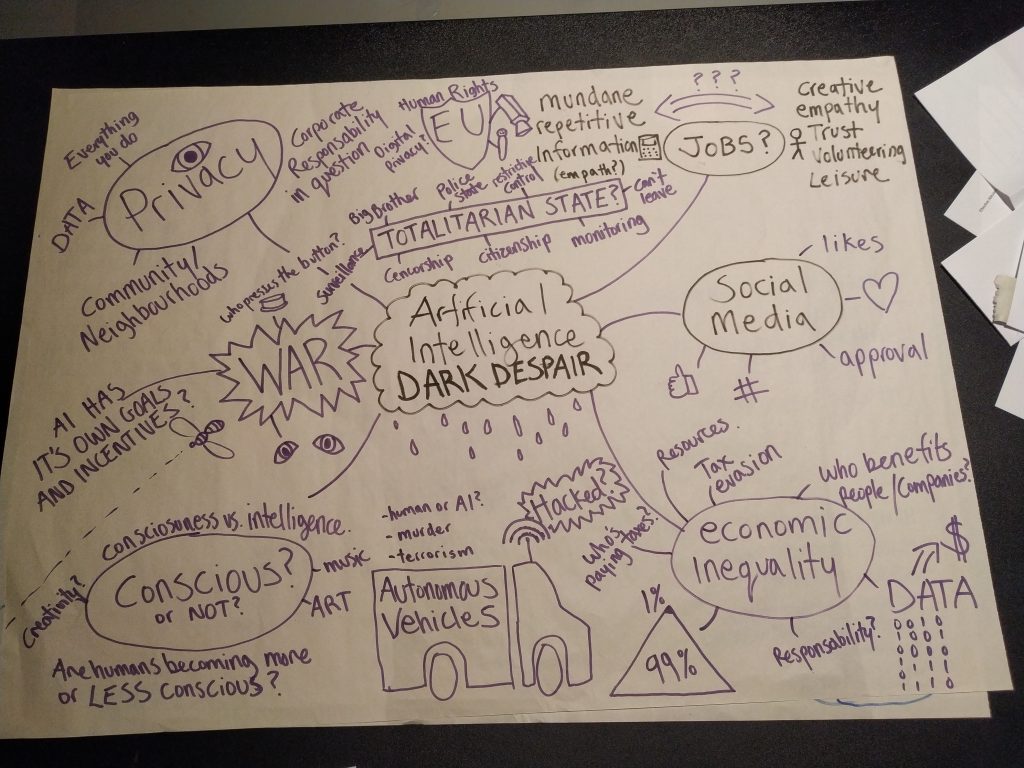

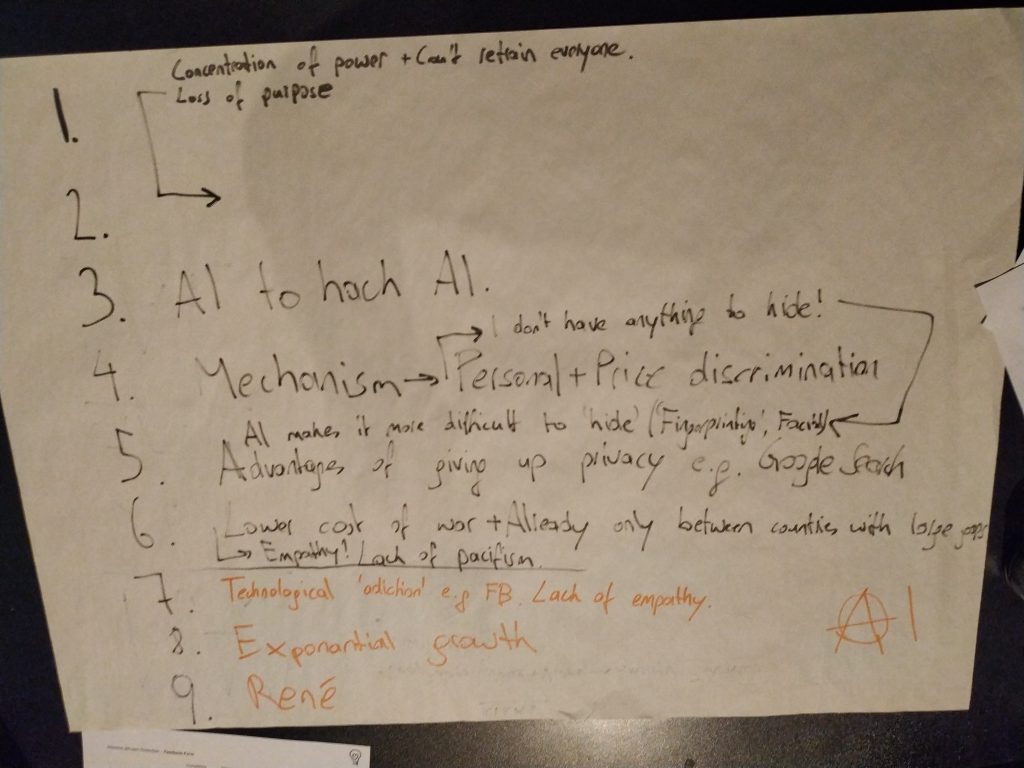

Group 1&2 – Dark Despair

These are the two posters from the Dark Despair groups:

Privacy

In a world with AGI, everything you do could be tracked. Data that now sits in disparate systems could be combined and acted upon (not in your interest). Think 1984. You won’t be able to hide; your face will be detected.

Totalitarian State

This leads right into the second point of despair, a totalitarian state. One where Big Brother is always watching. The EU is making a case for privacy and human rights, but can it withstand AGI?

Censorship, like that already in place in China today, could lead to total control of the population. You might not be able to leave (e.g. if your social credit score is too low).

War

Killer bees, but this time for real (or, well, artificial, with tiny bombs). This is already becoming real, and warfare could become more dangerous and one-sided with an AGI on one side of the battle. Who presses the button? And are the goals of the AGI the same as ours?

And what if this makes war cheaper? Instead of spending years and millions training a soldier, just fly in some (small) drones that control themselves. Heck, what if they can repair themselves?

Will there be any empathy left in war? If you’re not there, why see the other side as a real human?

Jobs

Will there be any jobs left? A(G)I might relieve us of mundane and repetitive tasks (a positive in most cases), but what about

And for whom would we be working? Will it be to better humanity or to further the goals of the AGI (which might not align with ours)?

Social Media

Closer to home, learning algorithms (ANI) are already influencing our lives and optimising our time on social networks, making us hanker for likes, hearts, and approval. What if Facebook (social media), Amazon (buy this now, watch Twitch), Google (watch YouTube), Netflix, etc. become even better at this? Will we become the fat people from Wall-E?

Economic Inequality

And whilst we’re binge-watching some awesome new series, the AGI is hacking away at tax evasion (which some people are already good at; imagine the possibilities for an AGI).

Where will the benefits go? Do they go to society (like now via taxes and positive externalities) or will companies (and their executives) rake in all the benefits? With more and more data, who will benefit? How will the benefits ‘trickle-down’?

Autonomous Vehicles

In many US states,

Consciousness

Consciousness and intelligence don’t necessarily go hand in hand. Will AGI enjoy art, music, or anything at all? And, surprisingly to me, we also asked: are humans becoming less conscious?

AGI vs AGI

What if you take the time to program safety into your AGI, and then the other team (read: country) doesn’t, and their AGI becomes more intelligent faster but doesn’t share our goals? Guess who ‘wins’.

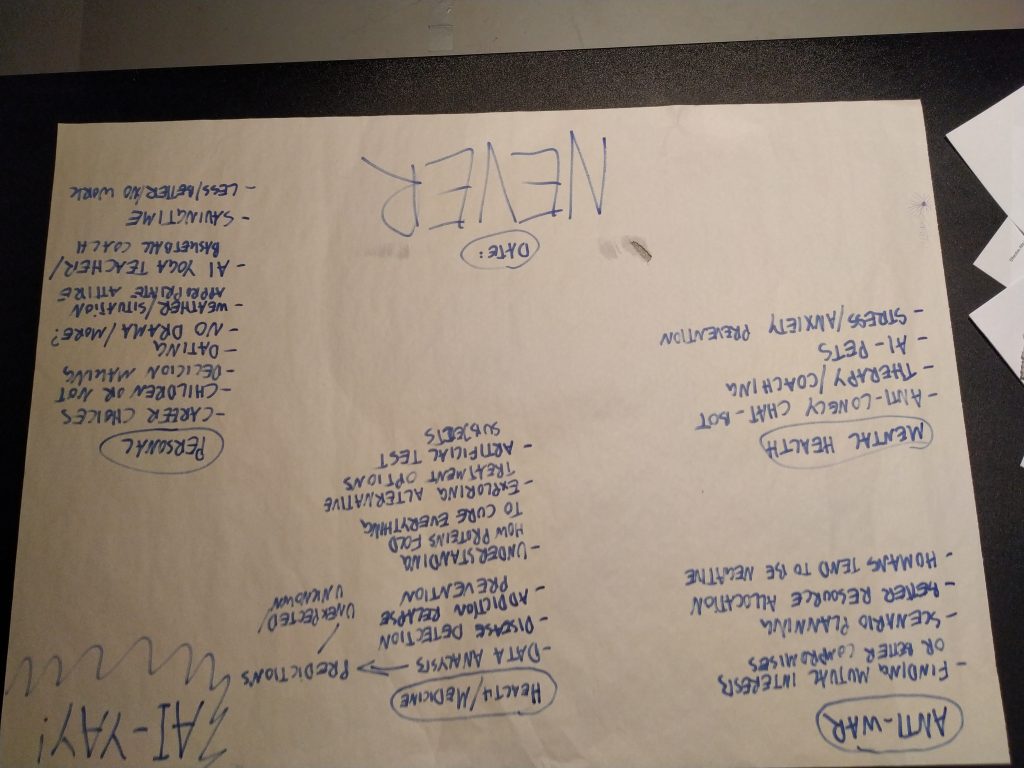

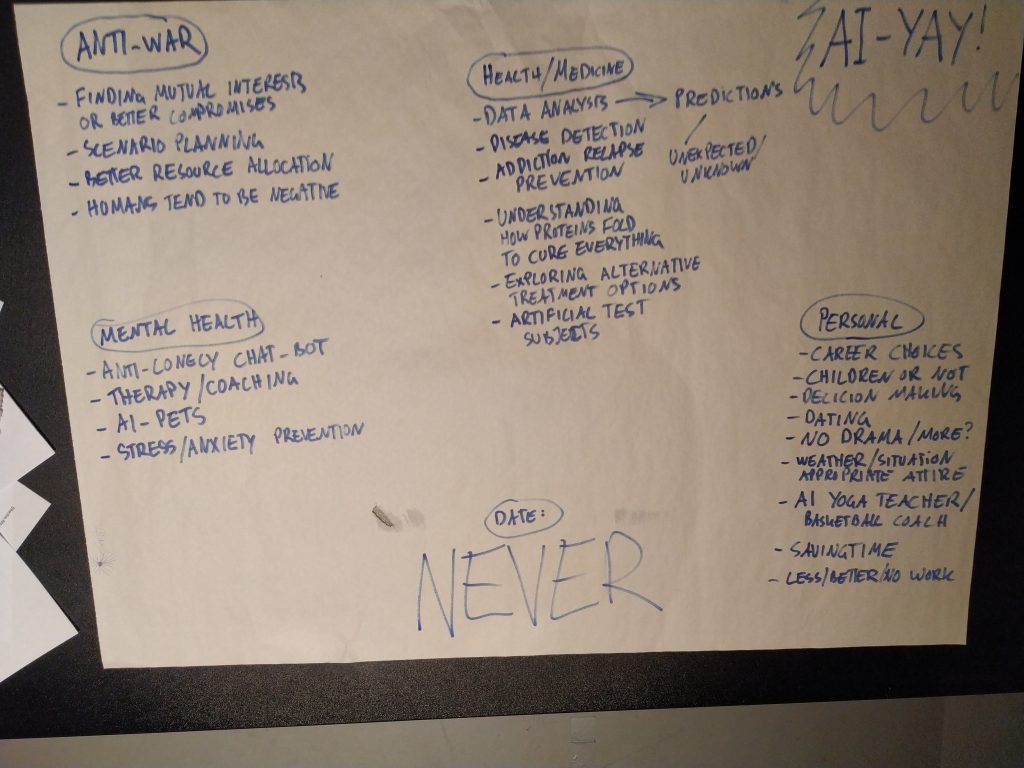

Group 3 – Bright Lights (AI-YAY!)

This is the poster from the Bright Lights group.

Anti-War

AGI could find better compromises and mutual interests. It could help us plan scenarios better (show the losses from war, shorten wars, show the benefits of cooperating, etc.). We pesky humans tend to be quite negative; what if AGI could show us that war is not needed to achieve our goals?

War

Health/Medicine

With better data analysis, we can make better predictions and make better medicine. We can see the unpredicted/unknown and intervene before it’s too late.

We can help people with addictions avoid relapse. Data from cheap trackers could help someone stay clean. It could even detect bad patterns and help people before things get too bad.

If we understand how proteins fold (and we’re getting better at it, see AlphaFold), we might cure every disease we know. The possibilities of AGI for health are endless and exciting.

Mental Health

A chatbot could keep you company. As the number of older people grows (before we make them fit again), an AGI could be their companion.

Chat to your AGI and ditch the therapist. Get coaching and live your best life. Not only for people who have access to both right now. No, therapy and coaching for everyone in the world.

Personal

AGI could help you make life decisions (think of the dating simulation in Black Mirror). Choose the very best career for your happiness/fulfilment. Should you have children? Dating, NO MORE DRAMA!

Have your AGI tell you the weather. Have it be your personal yoga teacher, your basketball coach. Let it take away boring work, save you time, and let you live your best life.

Conclusion

Two hours isn’t enough to tackle AI and our possible future. But I do hope that we’ve been able to inspire everyone who was there, and all of you reading this, about the possible futures that lie ahead.

“May we live in interesting times” is a quote I find very appropriate for this topic. It can go many ways (and is already doing so). Whether, and when, we will have AGI remains to be seen. Until then, I hope to see you at our next Meetup.

Resources

Videos

Nick Bostrom – What happens when our computers get smarter than we are?

Max Tegmark – How to get empowered, not overpowered, by AI

Grady Booch – Don’t fear superintelligent AI

Shyam Sankar – The rise of human-computer cooperation

Anthony Goldbloom – The jobs we’ll lose to machines, and the ones we won’t (4 min)

Two Minute Papers – How Does Deep Learning Work?

Crash Course – Machine Learning & Artificial Intelligence

Computerphile – Artificial Intelligence with Rob Miles (13 episodes)

Books

Superintelligence – Nick Bostrom (examining the risks)

Life 3.0 – Max Tegmark (optimistic)

The Master Algorithm – Pedro Domingos (explanation of learning algorithms)

The Singularity Is Near – Ray Kurzweil (very optimistic)

Humans Need Not Apply – Jerry Kaplan (good intro, conversational)

Our Final Invention – James Barrat (negative effects)

Isaac Asimov’s Robot Series (fiction 1940-1950, loads of fun!)

TV Shows

Person of Interest (good considerations)

Black Mirror (episodic, dark side of technology)

Westworld (AI as humanoid robots)

Movies

Ex Machina (AI as humanoid robot)

Blade Runner (cult classic, who/what is human?)

Eagle Eye (omnipresent AI system)

Her (AI and human connection)

2001: A Space Odyssey (1968, AI ship computer)

Research/Articles

Effective Altruism Foundation on Artificial Intelligence Opportunities and Risks

https://80000hours.org/problem-profiles/positively-shaping-artificial-intelligence/

80,000 Hours problem profile on artificial intelligence

https://80000hours.org/topic/priority-paths/ai-policy/

80,000 Hours on AI policy (also has great podcasts)

Great, and long-but-worth-it, article on The AI Revolution

Future Perfect (Vox) article on AI safety alignment

https://cs.nyu.edu/faculty/davise/papers/Bostrom.pdf

Ernest Davis on Ethical Guidelines for a Superintelligence

https://intelligence.org/files/PredictingAI.pdf

On how bad we are at predicting when AGI will happen

https://intelligence.org/files/ResponsesAGIRisk.pdf

Responses to Catastrophic AGI Risk

https://kk.org/thetechnium/thinkism/

Kevin Kelly on Thinkism, why the Singularity (/AGI) won’t happen soon

https://deepmind.com/blog/alphafold/

DeepMind (Google/Alphabet) on AlphaFold (protein folding)

Meetup:

Awesome newsletter (recommended by an attendee):

http://www.exponentialview.co/

Download the full resource list